To All the Books I Published Before

To declare my intentions, the first book I bought for my new imprint, Poseidon Press, was Henry Bean’s 'The Nenoquich.' It was published, on my first list, in 1982. And now, mirabile dictu, 'The Nenoquich' has returned to life.

Jazz Remains the Sound of Modernism

Armstrong and Ellington, Coltrane and Davis, Gillespie and Parker were central to the same project as other modernists: they reconfigured time and space to craft an alternative mode of expression.

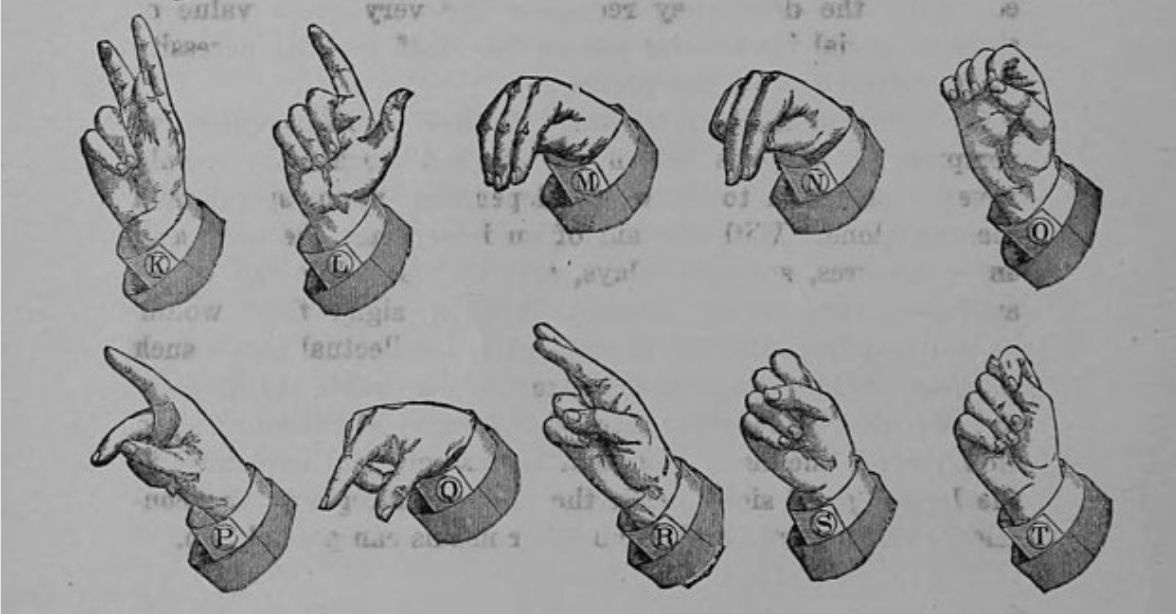

Rise of the Ghost Machines

You’d think two centuries would be long enough for us to sort the singer from the song, to divine where the soul ends and our machines begin. You’d think wrong.

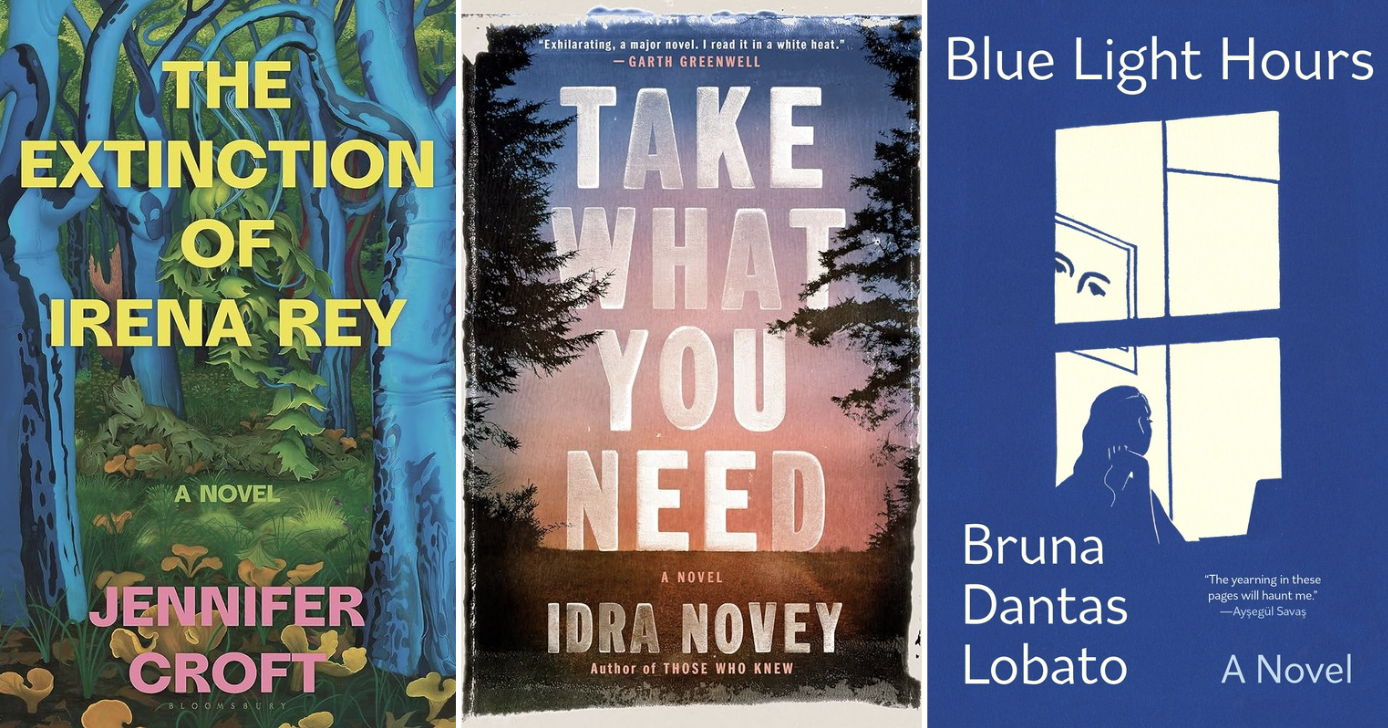

Want to Write Better Fiction? Become a Translator

For K.E. Semmel, Jenny Croft, Idra Novey, and Bruna Dantas Lobato, translation was a crucial training ground for writing fiction.

Against ‘Latin American Literature’

The classification of “Latin American literature” puts both Anglophone and Hispanophone writers in a double-bind.

Hymn for Walpurgisnacht

Walpurgisnacht is a gloaming time when the membrane between the here and the hereafter is more porous.

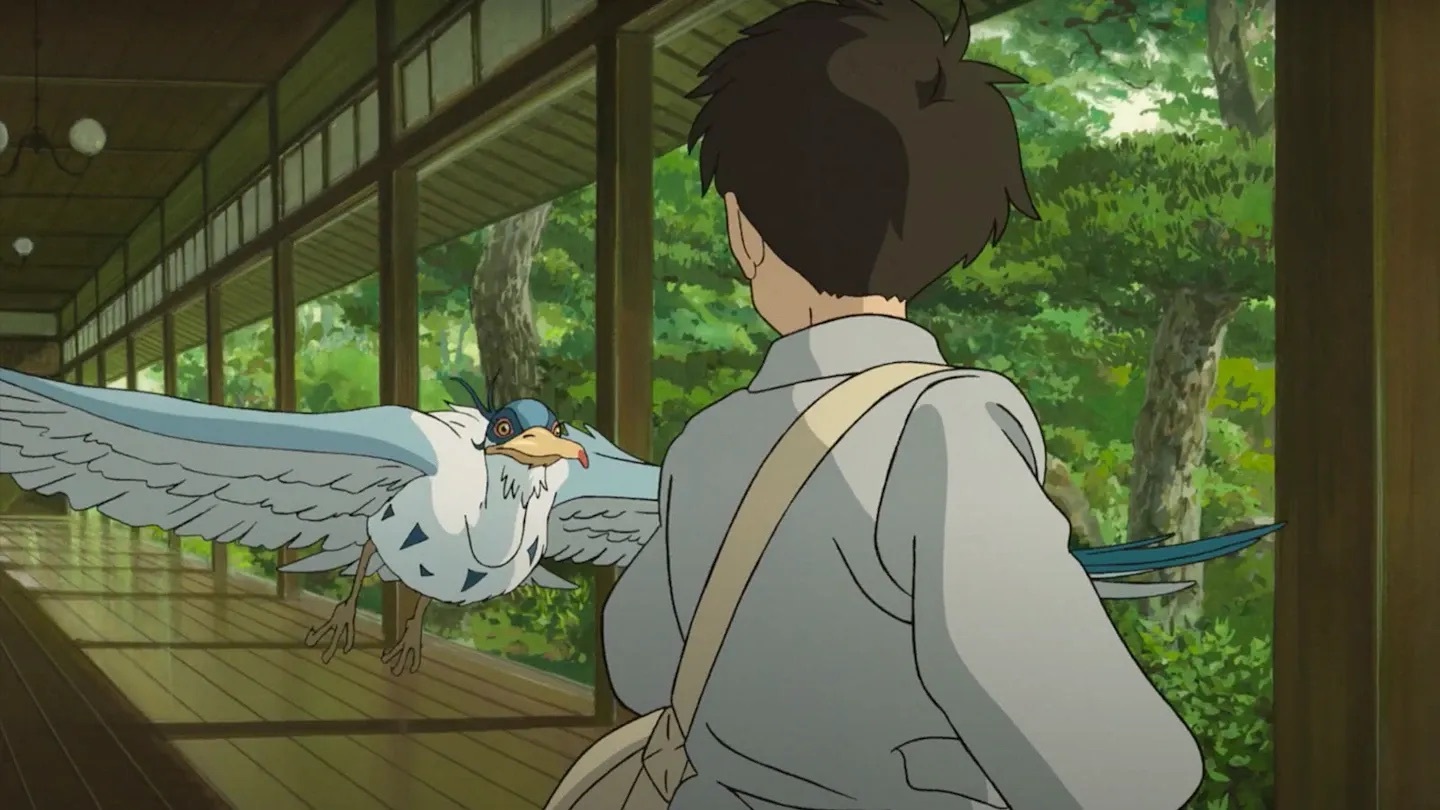

The Other Boy and the Heron

The heron has a robust mythological history across many cultures, and while the meanings differ, many deal with death, rebirth, and transformation.

The Virtue of Slow Writers

The slow writer embraces the protracted and unpredictable timeline, seeing it not as fraught or frustrating but as an opportunity for openness and discovery.

Tennis Lessons from David Foster Wallace

I was, and still am, the most reviled type of tennis player.
