Today, April 30, marks the twentieth anniversary of my last day in the newsroom of a daily newspaper. In truth, my newspaper career was neither long nor particularly illustrious. For about four years in my early twenties I worked at two small newspapers: the Mill Valley Record, the decades-old weekly newspaper in my hometown that died a few years after I left; and the Aspen Daily News, which, miraculously, remains in business today. Still, I loved the newspaper business. I have never worked with better people than I did in that crazy little newsroom in Aspen, and I probably never will. I quit because it dawned on me that, while I was a good reporter, I had neither the skills nor the intestinal fortitude to follow in the footsteps of my heroes, investigative reporters like Bob Woodward and David Halberstam. What I couldn’t know the day I left the Daily News and began the long trek that led first to graduate school and then to college teaching was the sheer destructive power of the bullet I was dodging.

The Pew Research Center’s “State of the News Media 2012” report offers a sobering portrait of what has happened to print journalism in the twenty years since I left. After a small bump during the Clinton Boom of the 1990s, advertising revenue for America’s newspapers has fallen off a cliff in the past decade, dropping by more than half from a peak of $48.7 billion in 2000 to $23.9 billion in 2011. Thus far at least, online advertising isn’t saving the business as some hoped it might. Online advertising for newspapers was up $207 million between 2010 and 2011, but in that same period, print advertising was down $2.1 billion, meaning print losses outweighed online gains by roughly 10 to 1.

But as troubling as the death of print journalism may be for our collective civic and political lives, it may have an even more lasting impact on our literary culture. For more than a century, newspaper jobs provided vital early paychecks, and even more vital training grounds, for generations of American writers as different as Walt Whitman, Ernest Hemingway, Joyce Maynard, Hunter S. Thompson, and Tony Earley. Just as importantly, reporting jobs taught nonfiction writers from Rachel Carson to Michael Pollan how to ferret out hidden information and present it to readers in a compelling narrative.

Now, though, the infrastructure that helped finance all those literary apprenticeships is fast slipping away. The vacuum left behind by dying print publications has been largely filled by blogs, a few of them, like the Huffington Post and the Daily Beast, connected to huge corporations, many others written by bathrobe-clad auteurs like yours truly. This is great for readers, who need only fire up their laptop – or increasingly, their tablet or smartphone – to have instant access to nearly all the information produced in the known world, for free.

But the system’s very efficiency is also its Achilles’ heel. When I worked in newspapers, a good part of my paycheck came from sales of classified ads. That’s all gone now, thanks to Craigslist and eBay. We also were a delivery system for circulars from grocery stores and real estate firms advertising their best deals. Buh-bye. Display ads still exist online, but advertisers are increasingly directing their ad dollars to Google and Facebook, which do a much better job of matching ads to their users’ needs. Add to this the longer-term trend of locally owned grocery stores, restaurants, and clothing shops being replaced by national chains, which draw more business from nationwide TV ad campaigns, and the economic model that supported independent reporting for more than a hundred years has vanished.

Without a way to make a living from their work, most bloggers are hobbyists, and most hobbyists come at their hobby with an angle. So, you have realtor blogs that tout local real estate and inveigh against property taxes. Or you have historical preservation blogs that rail against any new construction. Or you have plain old cranks of the kind who used to hog the open discussion time at the beginning of local city council meetings, but now direct their rants, along with pictures, smart-phone videos, and links to other cranks in other cities, onto the Internet. What you don’t have is a lot of guys like I used to be, who couldn’t care less about the outcome of the events they’re covering, but are being paid a living wage to present them accurately to readers.

The debate over the downsides of the Internet tends to focus on the consumer end, arguing, as Nicholas Carr does in his bestseller, The Shallows, that the Internet is making us dumber. That may or may not be true – I have my doubts – but as we near the close of the second decade of the Internet Era, we may be facing a far greater problem on the producer end: the atrophying of a central skill set necessary to great literature, that of taking off the bathrobe and going out to meet the people you are writing about. I mean to cast no generational aspersions toward the web-savvy writers coming up behind me, but having done both, I can tell you that blogging is nothing like reporting. Just about any fact you can find, or argument you can make, is available online, and with a few clicks of the mouse, anyone can sound like an expert on virtually any subject. And, because so far the blogosphere is, for the great majority of bloggers, quite nearly a pay-free zone, most bloggers are so busy earning a living at their real job, they have no time for old-fashioned shoe leather reporting even if they had the skill set.

But in the main, today’s younger bloggers don’t have those skills, because shoe-leather reporting isn’t all that useful in the Internet age. Reporting is slow. It’s analog. You call people up and talk to them for half an hour. Or you arrange a time to meet and talk for an hour and a half. It can take all day to report a simple human-interest story. To win eyeballs online, you have to be quick and you have to be linked. Read Gawker some time. Or Jezebel. Or even a site like Talking Points Memo. There’s some original reporting there, but more common are riffs on news stories or memes created by somebody else, often as not from television or the so-called “dead-tree media.” When there is an original piece online, often it comes from an author flacking for another, paying gig – a book, a business venture, a weight-loss program, a political career.

Clay Shirky, the NYU media studies professor and author of Here Comes Everybody, has suggested the crumbling of economic support for traditional print media and the original reporting it engendered is a temporary stage in the healthy process of creative destruction that goes along with the advent of any new game-changing technology. “The old stuff gets broken faster than the new stuff is put in its place,” Shirky is quoted as saying in The Pew Center’s “State of the News Media 2010” report.

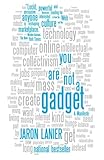

Maybe Shirky is right and online news sites will discover an economic model to replace the classified pages and grocery-store ads, but as virtual reality pioneer Jaron Lanier points out in You Are Not A Gadget, we’ve been waiting a long time for the destruction to start getting creative. Lanier, who is more interested in music than writing, argues that for all the digi-vangelism about the waves of creativity that would follow the advent of musical file-sharing, what has happened so far is that music has gotten stuck in a self-reinforcing loop of sampling and imitation that has led to cultural stasis. “Where is the new music?” he asks. “Everything is retro, retro, retro.”

Lanier writes:

I have frequently gone through a conversational sequence along the following lines: Someone in his early twenties will tell me I don’t know what I’m talking about, and then I’ll challenge that person to play me some music that is characteristic of the late 2000s as opposed to the late 1990s. I’ll ask him to play the track for his friends. So far, my theory has held: even true fans don’t seem to be able to tell if an indie rock track or a dance mix is from 1998 or 2008, for instance.

I am certainly not the go-to guy on contemporary music, but, like Lanier, I fear we are creating a generation of riff artists, who see their job not as creating wholly new original projects but as commenting upon cultural artifacts that already exist. Whether you’re talking about rappers endlessly “sampling” the musical hooks of their forebears, or bloggers snarking about the YouTube video of Miami Heat star Shaquille O’Neal holding his nose on the bench after one of his teammates farted during the first quarter of a game against the Chicago Bulls, you are seeing a culture, as Lanier puts it, “effectively eating its own seed stock.”

Thus far this cultural Möbius strip hasn’t affected books to the same degree that it has the news media and music because, well, authors of printed books still get paid for having original ideas. (If you wonder why cyber evangelists like Clay Shirky keep writing books and magazine articles printed on dead trees, there’s your answer. Writing a book is a paid gig. Blogging is effectively a charitable donation to the cultural conversation, made in the hope that one’s donation will pay off in some other sphere, like, say, getting a book contract.) The recent U.S. government suit against Apple and book publishers over alleged price-fixing in the e-book market, which would allow Amazon to keep deeply discounting books to drive Kindle sales, suggests that authors can’t necessarily count on making a living from writing books forever. But even if by some miracle, books continue to hold their economic value as they move into the digital realm, the people who write them will still need a way to make a living – and just as importantly, learn how to observe and describe the world beyond their laptop screen – in the decade or so it takes a writer to arrive at a mature and original vision.

Try to imagine what would have become of Hemingway, that shell-shocked World War I vet, if he hadn’t found work on the Kansas City Star, and later, the job as a foreign correspondent for the Toronto Star that allowed him to move to Paris and raise a family. The same goes for a writer as radically different as Hunter S. Thompson, who was saved from a life of dissipation by an early job as a sportswriter for a local paper, which led to newspaper gigs in New York and Puerto Rico. All of his best books began as paid reporting assignments, and his genius, short-lived as it was, was to be able to report objectively on the madness going on inside his drug-addled head.

In 2012, we live in a bit of a false economy in that novelists and nonfiction writers in their thirties and forties are still just old enough to have begun their careers before content began to migrate online. Thus, we can thank magazines for training and paying John Jeremiah Sullivan, whose book of essays, Pulphead, consists largely of pieces written on assignment for GQ and Harper’s. We should also be thankful for Gourmet magazine, which, until it went under in 2009, sent novelist Ann Patchett on lavish, all-expenses-paid trips around the world, including one to Italy, where she did the research on opera singers that fueled her bestselling novel, Bel Canto. In a quirkier, but no less important way, we can thank glossy magazines for The Corrections by Jonathan Franzen, who supported himself by writing for Harper’s, The New Yorker, and Details during his long, dark night of the literary soul in the late 1990s before his breakout novel was published.

Those venues – most of them, anyway – still exist, but they are the top of the publishing heap, and the smaller, entry-level publications of the kind I worked for twenty years ago, are either dying or going online. Increasingly, my decision to leave journalism to enter an MFA program twenty years ago seems less a personal life choice than an act guided by very subtle, yet very powerful economic incentives. As paying gigs for apprentice writers continue to dwindle, apprentice writers are making the obvious economic choice and entering grad school, which, whatever its merits as a writing training program, at least has the benefit of possibly leading to a real, paying job – as a teacher of creative writing, which, as you may have noticed, is what most working literary writers do for a living these days.

Perhaps that is what people are really saying when they talk about the “MFA aesthetic,” that insular, navel-gazing style that has more to do with a response to previous works of fiction than to the world most non-writers live in. Perhaps the problem isn’t with MFA programs at all, but with the fact that, for most graduates of MFA programs, it’s the only training in writing they have. They haven’t done what any rookie reporter at any local newspaper has done, which is observed a scene – a city council meeting, a high school football game, a small-plane crash – and then written about it on the front page of a paper that everybody involved in that scene will read the next day. They haven’t had to sift through a complex, shifting set of facts – was that plane crash a result of equipment malfunction or pilot error? – and not only get the story right, but make it compelling to readers, all under deadline as the editor and a row of surly press guys are standing around waiting to fill that last hole on page one. They haven’t, in short, had to write, quickly, under pressure, for an audience, with their livelihood on the line.

It is, of course, pointless to rage against the Internet Era. For one thing, it is already here, and for another, the Web is, on balance, a pretty darn good thing. I love email and online news. I use Wikipedia every day. But we need to listen to what the Jaron Laniers of the world are saying, which is that we can choose what the Web of the future will look like. The Internet is not like the weather. It isn’t something that just happens to us. The Internet is merely a very powerful tool, one we can shape to our collective will, and the first step along that path is deciding what we value and being willing to pay for it.

Image via Wikimedia Commons